Where do great ideas come from?

The best ideas emerge when teams collaborate and people share thoughts without fear of being judged.

Hi, I’m Roy Zornow, founder of Usergoals. I’ve been designing products and user experiences for over 15 years. I strongly agree with the findings of Google’s “Project Aristotle”: the most effective teams are not necessarily those with the smartest people; they are those whose members share, collaborate, and respect and care for each other.

My goal is to contribute to this type of team, and ultimately, give people a better experience with technology.

What I do

- UI/UX design

- Agile project management

- Storymapping

- User research and testing

- Interactive prototyping

- Pragmatic personas

- Mentoring

Things I’ve made

- An enterprise-wide campaign management platform

- Apps and responsive websites

- Configurators and trip planners

- Social media integration

- A hands-free in-auto/mobile suite

- An ecommerce application

- Online contests

Influences

- Jeff Patton for Storymapping.

- Agile principles from Jeff Patton, the NYC Scrum User Group, and Mary Pratt, who runs it.

- Design Thinking from Jeremy Franck at A Faster Horse.

- Form optimization from Luke Wroblewski.

- Lean UX from the work of Jeff Gothelf.

- Colleagues – see the testimonials page.

- Books, websites, conferences, social media – see the About Me page.

The Work

I’ve included traditional work samples on this site, but the case studies below offer more detail and explain the thinking behind each project.

Case study: Responsive Trip Configurator

It’s much easier to take a spontaneous trip when you can “make it up as you go along”.

Case study: Marketing Campaign Management Platform

IBM finally recognizes its most neglected user: the IBM employee.

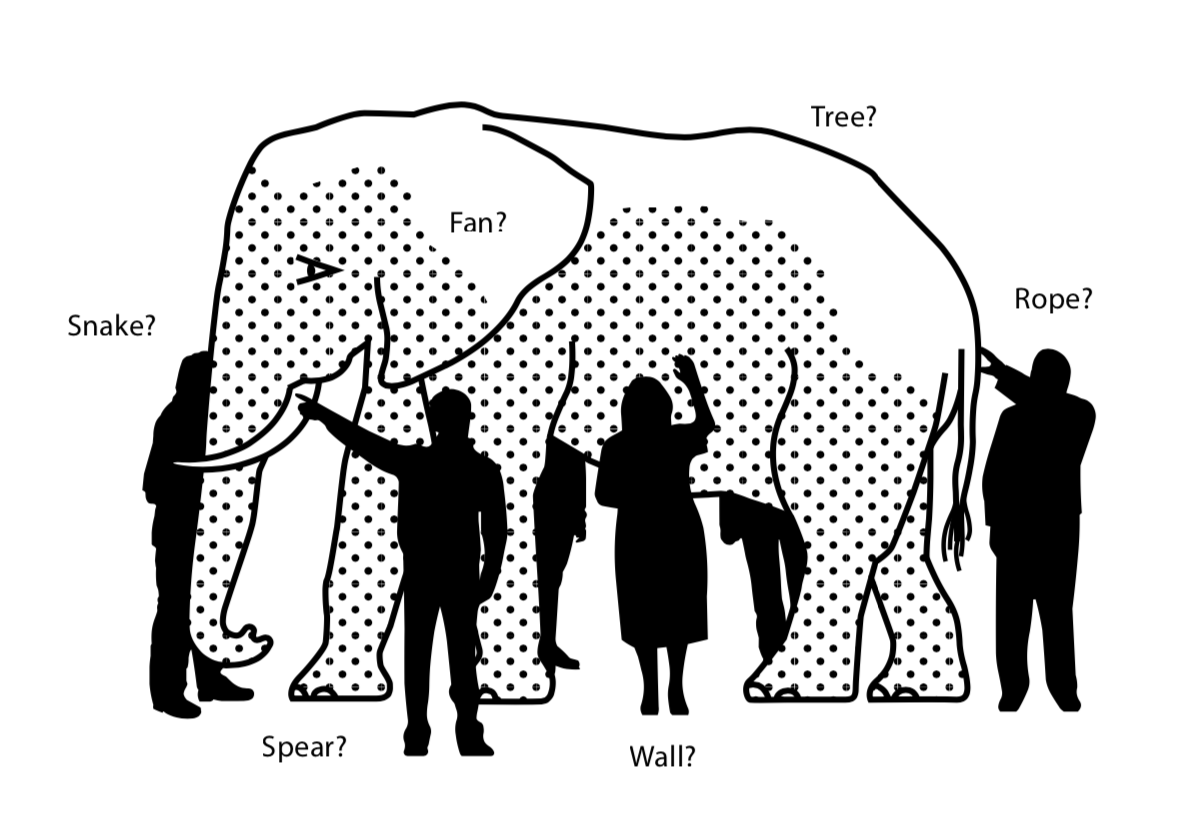

Case study: The Dual Role of UX in the Discovery of Complex Systems

Using UX skills to give your team an overview of a complex system will enhance shared understanding and open up new areas of discovery.

About Me

I’ve worked as a database administrator, intellectual property manager, management consultant, information architect, and senior UX designer. I’m the founder of Usergoals, a UX consultancy.

I have experience across multiple sectors: digital media, finance, consulting, government, public relations, big tech, pharmaceuticals, and sports.

Educational background: B.S. English literature; J.D. Law.

For more information, see the full About Me page.