When is Discovery done?

It’s uncomfortable to be in the Discovery phase of a large project without an end in sight. One unknown leads to another, like a set of diabolical Russian dolls, and you become circumspect, even though you know your job is to keep digging. Still, I’ve had managers ask me the legitimate question: “How will you know when you are done?”

A thorough Discovery will reveal:

- who all your users are

- their context, goals and pain points

- how frequently they interact with the current system

- their level of expertise

- their workarounds

- the other systems they use and how those systems interact (or don’t)

- how the system is designed to work

The Russian doll part here often involves figuring out how the system is designed to work, and why that is not happening. Users can become so accustomed to dysfunctional or nonfunctional systems that they cease questioning them, like a tree forming a protective gall over an irritant.

For example, users might hit frequent character-count errors because a form does not show a running character total. They only learn of the overrun after clicking “Submit”.

Why? – “Because this part of the interface was deprioritized due to higher-visibility fixes.”

Why not fix it anyway? – “Because the code base is antiquated.”

Why are you not updating it? – “Because it has to integrate with a different legacy system.”

Why not replace that system? – “I don’t know, they were thinking about that a few years ago.”

Meanwhile your boss is yelling: “Hey you! Is that Discovery done yet?!”

You could shorten your discovery time by simply listing “form fields with character counts are subject to frequent errors”. In that case you are just a scribe, jotting down symptoms. If you want to provide value, you’ve got to discover the mechanism that causes user pain. Only then can you intelligently suggest solutions.

There are other approaches to “done-ness”. One is to say that you are never done: the concept of Continuous Discovery. Teresa Torres talks about doing Discovery with a focus on opportunities rather than problems. She has created an Opportunity Solution Tree model to do this. This adds a dimension of complexity, because driving towards an opportunity’s outcome requires a theory – a theory of how things could be. To prove a theory you need to run experiments that answer questions like:

- Which elements of the existing system will be useful?

- Which broken ones do you fix?

- When do you add in new ones?

- Will an improvement degrade another area of functionality?

One can see how this goes beyond traditional Discovery, which is essentially mapping the territory of “what is”. As such, it requires continuous adjustment and effort.

Jeff Gothelf, in his article “When is Amazon Done?”, suggests that the way to stay focused when moving this many levers is to keep the customer in mind at all times. Eliminate opportunities that don’t directly help the end customer.

Amazon was built on this. Although their discovery method (not “methodology” – pet peeve) may be thought of as continuous, the way they think about adding value is discrete. It’s not through gradual improvement that Amazon created Prime next-day shipping. They didn’t say, “Let’s get it to around 46 hours per shipment and see how people react.” It’s more likely they said to themselves, “What’s the one biggest thing we can provide that will create customer loyalty, and what do we have to do to make that happen?” It’s risky to bet so much on one opportunity, but less so when customer value is the North Star.

On a much simpler level, I’ve found that Discovery starts to “feel” done when your team can start answering their own questions. For a UX’er on a shared discovery team, hearing the tech lead say something like “Almost correct, Roy. Actually a regional manager can re-assign an account, but only a district supervisor can delete one…” is a sweet sound. It means you are close to reaching the shared understanding we all seek in the Discovery phase.

Requirements = "Shut up"

Agile and User Experience guru Jeff Patton claims that requirements in software development “harm collaboration, stunt innovation, and threaten the quality of our products.” (Jeff Patton – Requirements Considered Harmful)

In person he’s more succinct: “the word ‘requirements’ means ‘shut up’.”

“What?” you might think. “Requirements can always be changed.”

Not always. In my experience, requirements, especially when codified into something like a Product Requirements Document (PRD), can easily become sacrosanct. The document becomes the deliverable, and any thinking that deviates from the required features can encounter a negative bias.

“But what’s so bad about listing what we should build?” Initially I thought it was because software moves too fast, but I don’t think that’s it.

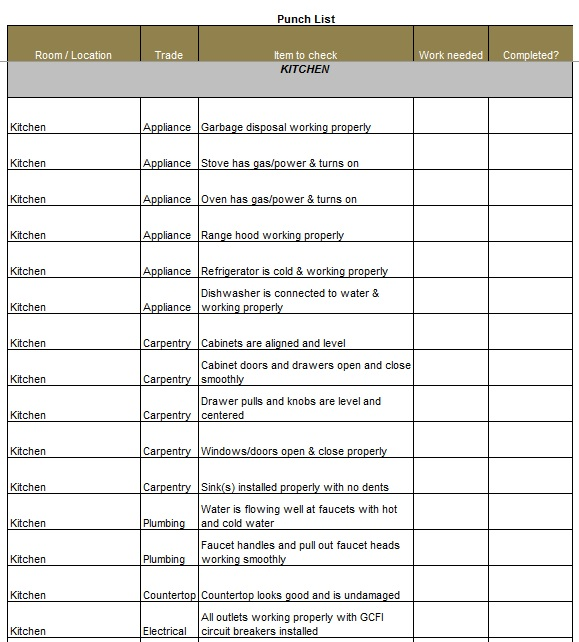

Let’s look at an analog: the punch list. This is a list of items that a contractor needs to confirm have been completed in order to be paid. It might include line items like “stove is connected to gas line”, “electrical outlets are providing 110v”, etc.

A punch list works because there aren’t many variables or options when it comes to a stove being connected or not connected. It would not carry over to a project where the concept of having a stove at all was in play.

Let’s say you were designing a personal submarine. User needs are going to emerge as discovery proceeds: Will people need to eat on the submarine? Will they want hot food? Will the heat generated be a problem? You might solve for all these issues only to discover that the people commissioning the submarine, your users, are raw-foodists.

So it’s not that software moves too fast; it’s that formal requirements tend to limit further inquiry, and in software development we are always discovering new things about users, how they work, and what they want.

Consulting companies have a legacy of being documentation-heavy. They need to produce “a thing” in order to reify their work, and to justify their fees. A satisfied client is their preferred outcome, not necessarily a satisfied customer.

Jeff Patton uses his own contractor analogy when talking about outcomes:

“For Ed, my kitchen remodeler, me being a happy customer is his desired outcome. Ed isn’t responsible for the ROI of my kitchen. I am. The kitchen wasn’t built to satisfy thousands of users. And whether I really use it, all of it, isn’t Ed’s responsibility.” (Jeff Patton – Requirements Considered Harmful)

The ROI on software isn’t going to be realized until the initial consulting contract is a distant memory. And building shippable software is an expensive way to do requirements testing.

Furthermore, there is a morale cost to a team that discovers alternative ways to add value, or to overcome unexpected roadblocks, but is held to the original requirements:

Can’t get access to users to interview in a short time frame?

- Value-driven: Pivot to use behavioral data from site metrics analysis.

- Requirements-centered: Cobble together (make up) hypothetical personas so you can check the boxes.

Redesigning a site for a client team with a durable track record of zero innovation?

- Value-driven: Uncover the gaping culture problem and address it.

- Requirements-centered: Deliver better navigation categories.

It takes courage to structure a contract that says, in essence, “we will provide value in the way we discover value is best provided”. However, companies that do this, that are holistic yet move quickly, and that can reorganize in light of new information will win over their more conservative competition.